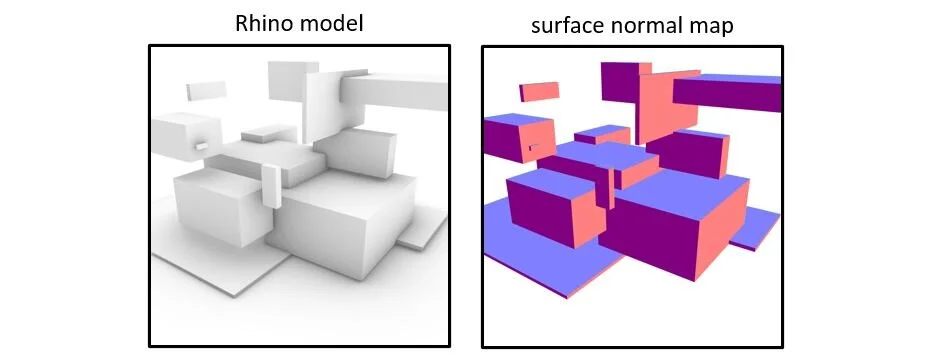

My goal is to train a Pix2PixHD model that can take an architectural drawing and synthesize a surface normal map. In a Rhino/Grasshopper environment, surface normal mapping is done by converting a model into a mesh and coloring its faces so that each orientation is displayed in a different color. This is the type of analysis that I want to be able to apply to any raw image.
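To make "coloring the surfaces by orientation" concrete, here is a minimal sketch of one common encoding: compute each mesh face's unit normal, then map each vector component linearly from [-1, 1] to [0, 255]. This is an assumption for illustration; Rhino's surface normal display mode may use its own palette, and the helpers `face_normal` and `normal_to_rgb` are hypothetical, not Rhino or Grasshopper APIs.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def face_normal(a: Vec3, b: Vec3, c: Vec3) -> Vec3:
    """Unit normal of triangle (a, b, c), assuming counter-clockwise winding."""
    e1 = (b[0] - a[0], b[1] - a[1], b[2] - a[2])
    e2 = (c[0] - a[0], c[1] - a[1], c[2] - a[2])
    # Cross product e1 x e2 is perpendicular to the face.
    n = (
        e1[1] * e2[2] - e1[2] * e2[1],
        e1[2] * e2[0] - e1[0] * e2[2],
        e1[0] * e2[1] - e1[1] * e2[0],
    )
    length = math.sqrt(sum(x * x for x in n))
    if length == 0:
        raise ValueError("degenerate triangle has no normal")
    return (n[0] / length, n[1] / length, n[2] / length)


def normal_to_rgb(n: Vec3) -> Tuple[int, int, int]:
    """Map each normal component from [-1, 1] linearly to [0, 255]."""
    return tuple(int(round((x + 1.0) * 0.5 * 255.0)) for x in n)
```

Under this convention a face pointing along +Z becomes (128, 128, 255) and a face pointing along -X becomes (0, 128, 128), so every orientation gets a distinct, repeatable color.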

Creating the Rhino Dataset

First, I generate a Rhino model of random geometry or import an existing Rhino model. I create a list of camera target points and camera location points, then use Grasshopper to cycle through every camera angle on the list and capture an image from each one. I run this process once in a rendered display mode and a second time in the surface normal display mode. The resulting dataset is a couple hundred paired images, each pairing a rendered view (A) with its corresponding surface normal mask (N).
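The camera lists can also be generated procedurally rather than placed by hand. Below is a minimal Python sketch, assuming the cameras orbit a single target point at a fixed radius and a few elevation angles; the orbit layout and the `orbit_cameras` and `view_name` helpers are hypothetical, and feeding these points into Grasshopper's viewport components is not shown.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def orbit_cameras(target: Point, radius: float, elevations_deg: List[float],
                  steps: int) -> List[Tuple[Point, Point]]:
    """Return (camera_location, camera_target) pairs orbiting `target`.

    For each elevation angle, `steps` cameras are spaced evenly around
    a horizontal circle, all looking at the same target point.
    """
    views = []
    for elev in elevations_deg:
        phi = math.radians(elev)
        for k in range(steps):
            theta = 2.0 * math.pi * k / steps
            loc = (
                target[0] + radius * math.cos(phi) * math.cos(theta),
                target[1] + radius * math.cos(phi) * math.sin(theta),
                target[2] + radius * math.sin(phi),
            )
            views.append((loc, target))
    return views


def view_name(index: int) -> str:
    """Shared filename so the A and N captures of one view line up."""
    return f"view_{index:04d}.png"
```

Using the same `view_name(i)` for both the rendered pass and the surface normal pass is what keeps each A image paired with its N mask.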

Training the Model

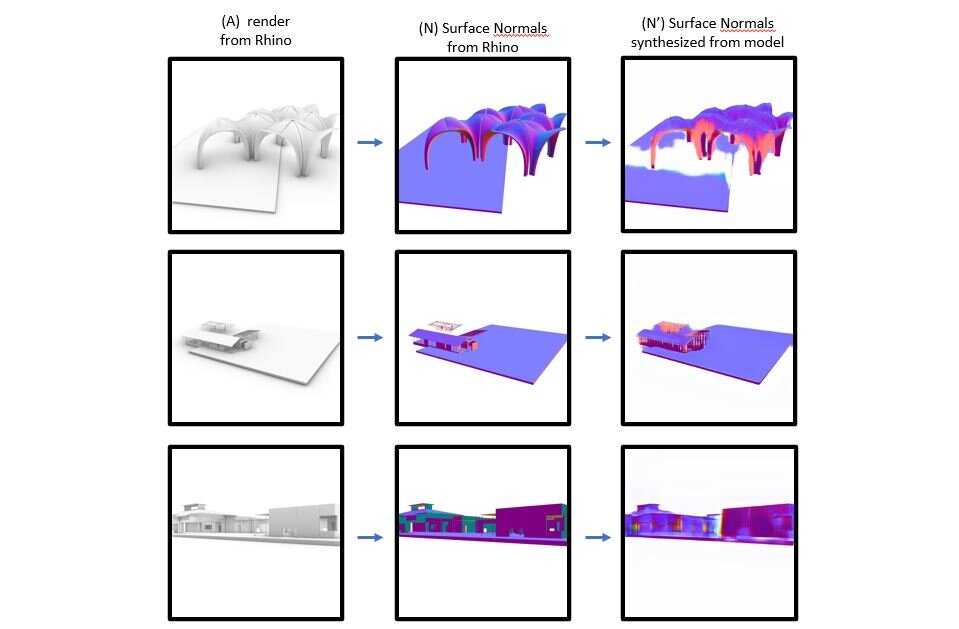

I use NVIDIA’s Pix2PixHD to train a new model that learns to synthesize surface normal masks from the original rendered images.
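Pix2PixHD's aligned dataset loader, when run with `--label_nc 0`, reads inputs from `<dataroot>/train_A` and targets from `<dataroot>/train_B` and pairs them by sorted filename. A minimal sketch for arranging the A/N pairs into that layout is below; `build_pix2pixhd_dataset` and the source folder names are hypothetical, and only filenames present in both passes are copied so the two sorted listings line up.

```python
import shutil
from pathlib import Path
from typing import List


def build_pix2pixhd_dataset(render_dir: Path, normal_dir: Path,
                            dataroot: Path, phase: str = "train") -> List[str]:
    """Copy matched render/normal pairs into <dataroot>/<phase>_A and _B.

    Rendered views (A) become the input folder and surface normal masks
    (N) become the target folder. Unmatched files are skipped.
    """
    renders = {p.name for p in render_dir.glob("*.png")}
    normals = {p.name for p in normal_dir.glob("*.png")}
    paired = sorted(renders & normals)

    dir_a = dataroot / f"{phase}_A"
    dir_b = dataroot / f"{phase}_B"
    dir_a.mkdir(parents=True, exist_ok=True)
    dir_b.mkdir(parents=True, exist_ok=True)

    for name in paired:
        shutil.copy2(render_dir / name, dir_a / name)
        shutil.copy2(normal_dir / name, dir_b / name)
    return paired
```

Training would then be launched with something like `python train.py --name rhino_normals --dataroot ./datasets/rhino_normals --label_nc 0 --no_instance` (the run name and path here are placeholders); check the Pix2PixHD README for the exact options your version supports.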

After a few hours of training, I try a few test images. Here are some cherry-picked examples that make it look like it’s working. The results are better when the input image resembles the Rhino rendered display mode used in training. While the model doesn’t have a strict comprehension of orientation, it has generally grasped that the main surface orientation is light purple and the perpendicular surfaces are pink and violet. It has also learned to treat white space as unlabeled background.
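One way to put a number on how well the model understands orientation, beyond cherry-picked examples, is to decode predicted and ground-truth colors back into vectors and measure the angle between them. The sketch below assumes the linear [-1, 1] to [0, 255] normal encoding (which Rhino's palette may not follow) and a hypothetical near-white threshold of 245 for the unlabeled background; pixels are plain RGB tuples to keep it dependency-free.

```python
import math
from typing import Optional, Sequence, Tuple

RGB = Tuple[int, int, int]


def rgb_to_normal(c: RGB) -> Tuple[float, float, float]:
    """Invert the [0, 255] -> [-1, 1] encoding and renormalize."""
    v = [x / 255.0 * 2.0 - 1.0 for x in c]
    length = math.sqrt(sum(x * x for x in v))
    return tuple(x / length for x in v)


def is_background(c: RGB, threshold: int = 245) -> bool:
    """Treat near-white pixels as unlabeled background (assumed threshold)."""
    return all(x >= threshold for x in c)


def mean_angular_error(pred: Sequence[RGB], truth: Sequence[RGB]) -> Optional[float]:
    """Mean angle in degrees between predicted and true normals.

    Pixels that are background in the ground truth are skipped.
    Returns None if no labeled pixels remain.
    """
    angles = []
    for p, t in zip(pred, truth):
        if is_background(t):
            continue
        a, b = rgb_to_normal(p), rgb_to_normal(t)
        # Clamp the dot product to guard acos against rounding error.
        dot = max(-1.0, min(1.0, sum(x * y for x, y in zip(a, b))))
        angles.append(math.degrees(math.acos(dot)))
    return sum(angles) / len(angles) if angles else None
```

The background mask is kept separate from the decoding because, under this encoding, pure white would otherwise decode to a valid diagonal normal.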

This is an early proof of concept, trained on only 500 non-diverse images for a short time, so I expect the results to improve with subsequent training runs. I’m repeating this process with depth maps, line maps, and object segmentation, which should result in a set of Pix2PixHD models that can take any image and produce an imperfect set of custom drawing analyses. The goal is to use those analyses to reverse the process and synthesize more realistic architectural drawings.