These are experiments in using Stable Diffusion as an image-to-image pipeline. Essentially, a kind of image style transfer using text prompts.

I’m using this huggingface/diffusers repository: https://github.com/huggingface/diffusers

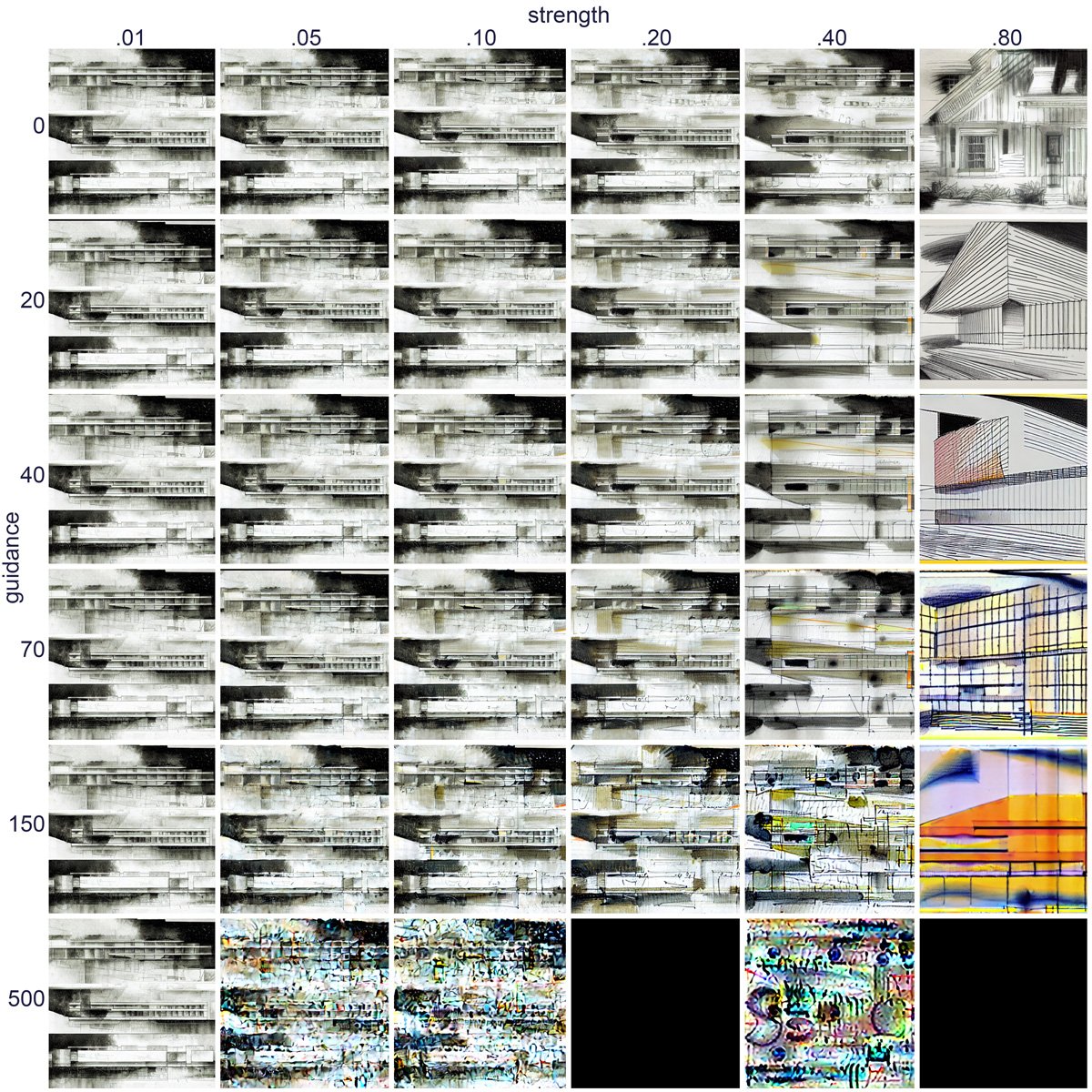

The stable diffusion model takes an initial image, a text prompt, a random seed, a strength value, and a guidance scale value. The following grid shows the results of passing an image through varying strength and guidance scales, all with the same text prompt and seed. The randomly generated prompt in this case is: “a page of yellow section perspective with faint orange lines”.

An increasing strength value adds greater variation to the initial image. At less than 0.05, it hardly effects the initial image, but past .50, it starts to become unrecognizable.

An increasing guidance scale increases the adherence of the image to the prompt. Beyond 100, the image quality becomes… radical.

Some squares get blacked out because some aspect of the generated image gets flagged as potentially NSFW. This is more common with high strength and guidance values.

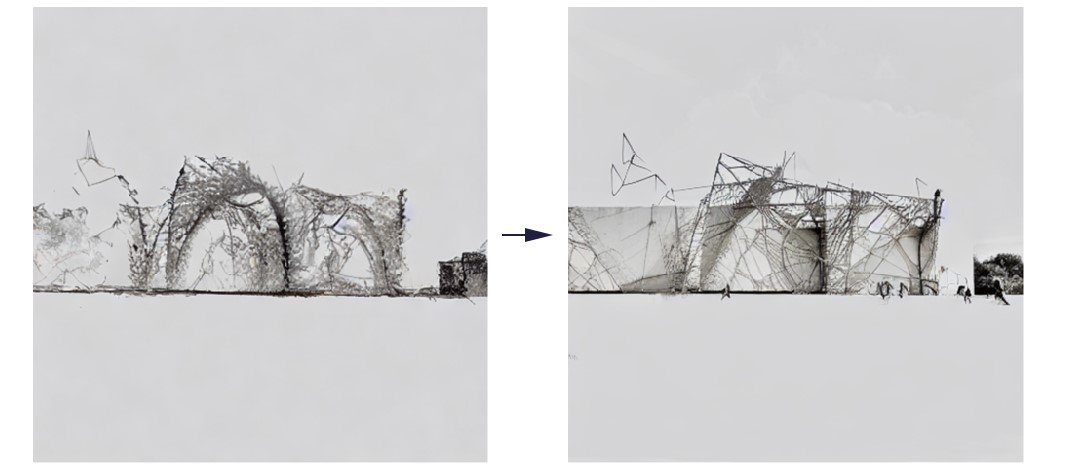

I readjusted the range of both strength and guidance, regenerated the prompt to: "detailed architectural photograph with minimalist circular people everywhere", and tried a new initial image.

The following are made with a random seed and randomly generated prompt for each iteration. The prompts are built with variable adjectives, subjects, drawing types, drawing mediums, etc, generally architectural in nature.

This range tends to be the best for the kind of image style transfer I’m looking for. Anything to the left is too similar to the original image, and anything to the right is too different.